DRAM vs NAND: Understanding the Two Memory Technologies Powering Modern Computing

Modern computing relies on two foundational memory technologies that serve very different but equally critical purposes. DRAM and NAND memory work together to deliver speed, efficiency, and data persistence across devices ranging from smartphones to hyperscale data centers. Understanding how DRAM and NAND differ is essential for anyone involved in technology strategy, system design, or digital transformation.

Table of Contents

- What Is DRAM?

- What Is NAND?

- Key Differences Between DRAM and NAND

- Performance and Latency Comparison

- Cost, Density, and Scalability

- Real-World Use Cases

- The Future of Memory Technologies

- Top 5 Frequently Asked Questions

- Final Thoughts

- Resources

What Is DRAM?

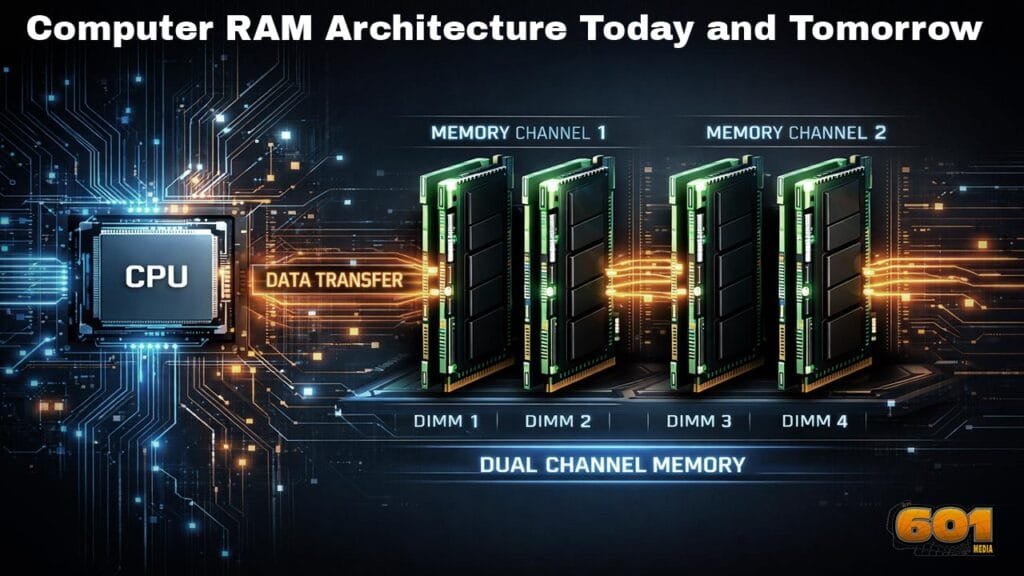

Dynamic Random Access Memory, commonly known as DRAM, is volatile memory designed for speed. It temporarily stores data that a processor actively uses, enabling near-instant access to instructions and applications. Because DRAM is volatile, it loses all stored data when power is removed.

DRAM stores each bit as an electrical charge in a tiny capacitor. That charge leaks away, so every row must be refreshed periodically, typically every 32 to 64 milliseconds. Refresh adds to power consumption, but the simple one-transistor, one-capacitor cell gives DRAM extremely low latency. Typical access times are measured in nanoseconds, making DRAM indispensable for real-time computing tasks.
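To make the refresh requirement concrete, the sketch below estimates how often a memory controller has to issue refresh commands. The 64 ms refresh window and 8,192 refresh commands per window are assumed values typical of DDR3/DDR4-class parts, not figures from this article, and the function names are illustrative; a specific device's datasheet is the authority.

```python
def refresh_interval_us(window_ms: float = 64.0, commands_per_window: int = 8192) -> float:
    """Average time between refresh commands, in microseconds.

    Both defaults are assumptions (typical DDR3/DDR4-style values).
    """
    return window_ms * 1000.0 / commands_per_window


def refresh_commands_per_second(window_ms: float = 64.0, commands_per_window: int = 8192) -> float:
    """How many refresh commands the controller issues each second."""
    return commands_per_window * 1000.0 / window_ms


interval = refresh_interval_us()
rate = refresh_commands_per_second()
print(f"{interval:.4f} microseconds between refresh commands")
print(f"{rate:,.0f} refresh commands per second")
```

Under these assumptions each individual row is refreshed only about 16 times per second, while the controller as a whole issues refresh commands far more often.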

In modern systems, DRAM functions as system memory, enabling multitasking, application execution, and responsive user experiences.

What Is NAND?

NAND flash memory is non-volatile storage designed for data persistence. Unlike DRAM, NAND retains data even when power is lost. This characteristic makes it ideal for long-term storage in solid-state drives, smartphones, USB drives, and embedded systems.

NAND stores data in floating-gate or charge-trap transistors organized into pages, which are grouped into blocks. Data is read and written one page at a time but erased a whole block at a time. While access latency is higher than DRAM, NAND compensates with massive storage density and lower cost per bit. NAND memory is also scalable, with multi-terabyte drives becoming standard in enterprise environments.
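The block-and-page organization has a practical consequence: NAND is programmed one page at a time, but a programmed page cannot be overwritten until its entire block is erased. The toy model below illustrates that rule. The class name `NandBlock` and the four-page block size are hypothetical and chosen only for readability; real blocks hold hundreds of pages or more.

```python
class NandBlock:
    """Toy model of one NAND block: program per page, erase per block."""

    def __init__(self, pages_per_block: int = 4):  # tiny, assumed size for illustration
        self.pages = [None] * pages_per_block      # None means "erased"

    def program(self, page: int, data: bytes) -> None:
        # A page that already holds data cannot be rewritten in place.
        if self.pages[page] is not None:
            raise ValueError("page already programmed; erase the block first")
        self.pages[page] = data

    def read(self, page: int):
        return self.pages[page]

    def erase(self) -> None:
        # Erase always clears every page in the block at once.
        self.pages = [None] * len(self.pages)


block = NandBlock()
block.program(0, b"hello")
try:
    block.program(0, b"world")      # in-place overwrite is rejected
except ValueError as exc:
    print("rejected:", exc)
block.erase()                        # whole block returns to the erased state
block.program(0, b"world")
print(block.read(0))
```

This erase-before-write behavior is one reason SSD controllers run a translation layer that redirects updates to fresh pages instead of rewriting data where it sits.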

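NAND technologies include SLC, MLC, TLC, and QLC, which store one, two, three, and four bits per cell respectively. Each added bit raises capacity and lowers cost per bit at the expense of endurance and speed.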
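Because these names correspond to one through four bits stored per cell, the number of distinct charge levels a cell must hold doubles with each step. The short sketch below works through that arithmetic; the helper name `voltage_levels` is illustrative.

```python
# Bits stored per cell for each NAND type.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def voltage_levels(bits_per_cell: int) -> int:
    """A cell storing n bits must distinguish 2**n charge levels."""
    return 2 ** bits_per_cell


for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s) per cell, {voltage_levels(bits)} charge levels")
```

More levels packed into the same voltage range leave narrower margins between them, which is why denser cell types tend to be slower to program and wear out sooner.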

Key Differences Between DRAM and NAND

The distinction between DRAM and NAND lies in purpose rather than superiority.

DRAM prioritizes speed and low latency, making it ideal for active workloads. NAND prioritizes persistence and density, enabling long-term storage. DRAM requires constant power and refresh cycles, while NAND does not.

DRAM is expensive per gigabyte but delivers unmatched performance. NAND is cost-effective at scale but slower by comparison.

Performance and Latency Comparison

Performance is where DRAM clearly dominates. DRAM device latency is roughly 10 to 20 nanoseconds, while NAND latency ranges from tens of microseconds for reads to milliseconds for erase operations, depending on workload and NAND type.

This gap explains why operating systems load applications into DRAM after retrieving them from NAND-based storage. NAND excels at capacity and sustained throughput, but only DRAM is fast enough to feed a processor during execution.

In data centers, this performance hierarchy directly impacts application responsiveness, AI model inference speed, and database transaction times.

Cost, Density, and Scalability

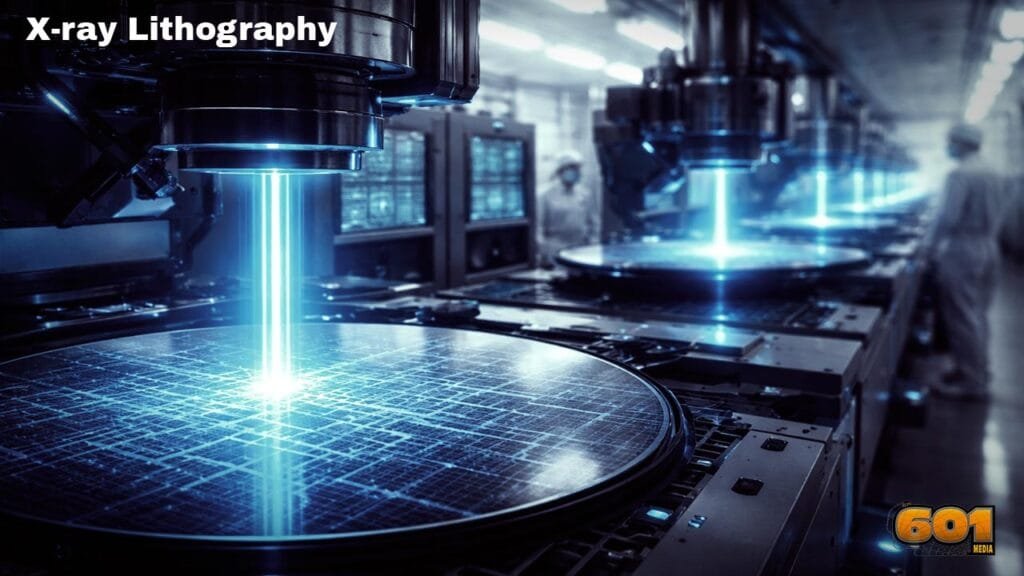

Cost efficiency is NAND’s strongest advantage. NAND memory can store far more data in the same physical space compared to DRAM. Advances such as 3D NAND stacking have dramatically increased density while reducing cost per bit.

DRAM scaling faces physical limitations related to capacitor size and charge leakage. As a result, DRAM remains significantly more expensive per gigabyte than NAND, and its pricing is subject to sharp market swings.

For organizations managing large-scale infrastructure, this cost disparity drives architectural decisions such as memory tiering and caching strategies.
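A simple way to reason about tiering and caching is the weighted-average access time: requests served from DRAM are fast, and the rest pay the NAND penalty. The sketch below applies that formula. The 100 ns and 100 microsecond figures are assumed, order-of-magnitude, system-level latencies chosen for illustration rather than measurements, and the function name is hypothetical.

```python
def effective_latency_ns(hit_ratio: float, dram_ns: float, nand_ns: float) -> float:
    """Average access time when a fraction `hit_ratio` of requests hit DRAM."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * dram_ns + (1.0 - hit_ratio) * nand_ns


DRAM_NS = 100.0        # assumed system-level DRAM access time
NAND_NS = 100_000.0    # assumed NAND SSD read time (100 microseconds)

for hit_ratio in (0.90, 0.99, 0.999):
    average = effective_latency_ns(hit_ratio, DRAM_NS, NAND_NS)
    print(f"hit ratio {hit_ratio:.3f}: about {average:,.0f} ns on average")
```

Even a small miss rate dominates the average because the NAND penalty is so much larger, which is why the size of the DRAM tier and its hit ratio matter so much in these designs.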

Real-World Use Cases

DRAM is used wherever speed is mission-critical. This includes system memory, graphics memory, high-frequency trading systems, and AI accelerators.

NAND dominates persistent storage. Consumer devices rely on NAND for photos, videos, and applications. Enterprises depend on NAND-based SSDs for databases, cloud storage, and backup systems.

Modern architectures increasingly combine both technologies through techniques such as RAM caching, NVMe storage, and persistent memory layers.
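RAM caching, the first of those techniques, can be sketched as a small least-recently-used (LRU) cache held in memory in front of a slower store. LRU is one common eviction policy among several, used here as an illustration. The `LruCache` class and the `slow_storage_read` function are hypothetical stand-ins, not part of any particular product.

```python
from collections import OrderedDict


class LruCache:
    """Keeps the most recently used items in RAM in front of slower storage."""

    def __init__(self, capacity, backing_read):
        self.capacity = capacity
        self.backing_read = backing_read   # called only on a cache miss
        self.entries = OrderedDict()       # oldest entry first
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.entries[key]
        self.misses += 1
        value = self.backing_read(key)     # slow path: go to storage
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used
        return value


def slow_storage_read(key):
    # Stand-in for a read from NAND-based storage.
    return f"data-for-{key}"


cache = LruCache(capacity=2, backing_read=slow_storage_read)
for key in ["a", "b", "a", "c", "b"]:
    cache.get(key)
print(f"hits={cache.hits} misses={cache.misses}")
```

The hit and miss counters give the hit ratio, the quantity that determines how much of the workload runs at DRAM speed instead of storage speed.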

The Future of Memory Technologies

The boundary between DRAM and NAND is beginning to blur. Emerging technologies such as storage-class memory aim to bridge the performance gap while maintaining persistence.

At the same time, demand for AI workloads, edge computing, and real-time analytics continues to push both DRAM and NAND innovation. Industry investment remains strong, with billions allocated annually to memory research and fabrication.

Despite emerging alternatives, DRAM and NAND will remain foundational for the foreseeable future.

Top 5 Frequently Asked Questions

Final Thoughts

The DRAM vs NAND comparison is not about choosing one over the other. It is about understanding how each fulfills a distinct role in modern computing architectures. DRAM delivers speed and responsiveness. NAND delivers scale and persistence.

Together, they form a performance hierarchy that enables everything from mobile apps to enterprise cloud platforms. Strategic technology decisions depend on leveraging both efficiently, not replacing one with the other.

Resources

- JEDEC Solid State Technology Association – Memory Standards

- IEEE Computer Society – Memory Architecture Research

- McKinsey Global Semiconductor Report

- Statista – Global Memory Market Data

About the author: Mark Mayo is an enthusiast of computers, AI, SEO/SEM, VFX, and digital audio, graphics, and video, and has been a digital entrepreneur since 1992. Articles include AI-assisted research. All content is published for educational and entertainment purposes only.