Computer RAM Architecture Today and Tomorrow

Modern computing performance is shaped as much by memory architecture as by raw processing power. Random Access Memory, commonly referred to as RAM, sits at the center of this performance equation. It bridges the speed gap between ultra-fast processors and comparatively slower storage, directly influencing responsiveness, efficiency, and scalability. This article explores how RAM architecture works today, the engineering trade-offs behind current designs, and how emerging technologies will redefine memory systems over the next decade.

Table of Contents

- Foundations of Modern RAM Architecture

- How Contemporary DRAM Systems Work

- RAM Within the Memory Hierarchy

- Current Performance Bottlenecks

- The Evolution of DDR Standards

- Specialized RAM for GPUs, AI, and Servers

- Energy Efficiency and Thermal Constraints

- Emerging RAM Technologies

- Future Memory Architectures

- Industry and Application Impact

- Top 5 Frequently Asked Questions

- Final Thoughts

- Resources

Foundations of Modern RAM Architecture

RAM is volatile memory designed to store data that a processor needs immediate access to. Unlike persistent storage, RAM prioritizes speed over durability. Modern RAM architecture balances four competing factors: latency, bandwidth, density, and power consumption. Each design decision reflects trade-offs between these parameters.

At a physical level, most system RAM today is based on Dynamic Random Access Memory, or DRAM. DRAM stores each bit of data in a tiny capacitor paired with a transistor. These capacitors leak charge over time, requiring periodic refresh cycles to maintain data integrity. This refresh requirement is a fundamental architectural constraint that shapes performance and energy usage.

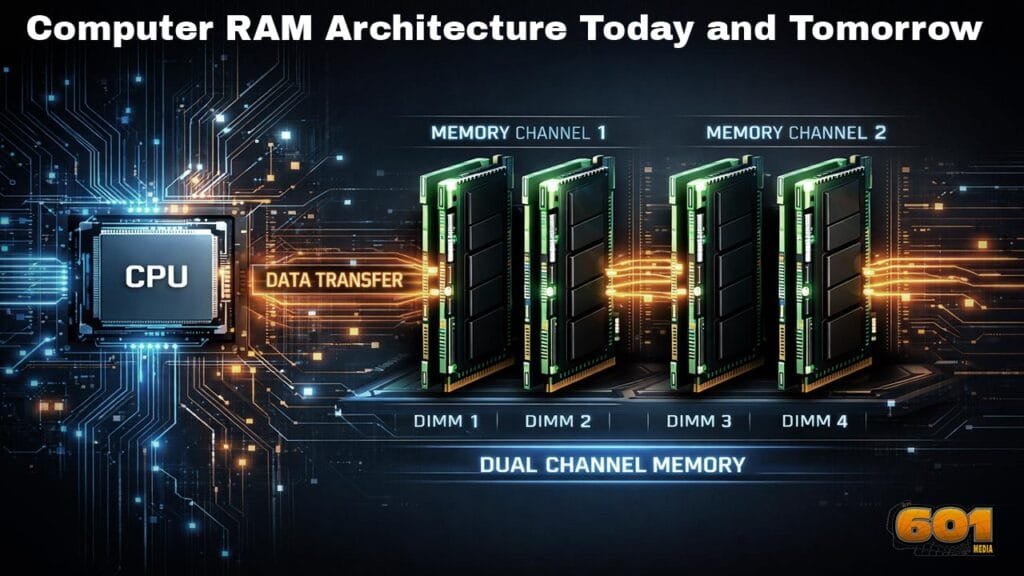

Logically, RAM is organized into channels, ranks, banks, rows, and columns. This hierarchical organization allows parallel access patterns but also introduces timing constraints. Memory controllers must carefully schedule reads, writes, and refresh operations to avoid conflicts and minimize latency.
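To make the hierarchy concrete, here is a minimal sketch of how a controller might slice an address into those coordinates. The field widths and their ordering are hypothetical, chosen only for illustration; real controllers use vendor-specific mappings, often with hashing.

```python
from typing import NamedTuple


class DramAddress(NamedTuple):
    channel: int
    rank: int
    bank: int
    row: int
    column: int


# Hypothetical field widths in bits, listed from least to most significant.
# Placing the channel bit low spreads neighbouring blocks across channels.
FIELD_BITS = (
    ("column", 10),
    ("channel", 1),
    ("bank", 4),
    ("rank", 1),
    ("row", 16),
)


def decode_address(address: int) -> DramAddress:
    """Split an integer address into DRAM coordinates using FIELD_BITS."""
    fields = {}
    for name, bits in FIELD_BITS:
        fields[name] = address & ((1 << bits) - 1)  # take the low `bits` bits
        address >>= bits                            # drop them before the next field
    return DramAddress(**fields)


print(decode_address(0x12345678))
```

Under this assumed layout, consecutive addresses stay in the same row until the column bits roll over, which is what lets sequential accesses reuse an open row.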

How Contemporary DRAM Systems Work

Modern DRAM modules are not simple linear memory arrays. They consist of multiple banks that allow concurrent operations. Each bank contains thousands of rows, and accessing data involves activating an entire row into a row buffer before column-level reads or writes can occur.

This architecture gives rise to key performance metrics such as CAS latency, row activation time, and precharge delay. While headline memory speeds often emphasize data rate in megatransfers per second, real-world performance depends heavily on how efficiently workloads align with DRAM access patterns.
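The relationship between these timings can be sketched in a few lines. The model below is deliberately simplified: it treats an access as a row hit, an access to a closed bank, or a row conflict, and it ignores every other timing constraint. The function names and the 16-16-16 and CL30 timing values are illustrative assumptions, not figures from a specific datasheet.

```python
from typing import Optional


def cycles_to_ns(cycles: int, data_rate_mts: float) -> float:
    """Convert memory clock cycles to nanoseconds.

    DDR memory transfers data twice per clock, so the clock frequency
    in MHz is half the data rate in MT/s.
    """
    clock_mhz = data_rate_mts / 2
    return cycles * 1000.0 / clock_mhz


def access_cycles(open_row: Optional[int], target_row: int,
                  cl: int, trcd: int, trp: int) -> int:
    """Cycles until data starts returning, under a simplified timing model."""
    if open_row == target_row:
        return cl                  # row hit: column access only
    if open_row is None:
        return trcd + cl           # bank closed: activate, then column access
    return trp + trcd + cl         # row conflict: precharge, activate, access


# Illustrative timings: CL = tRCD = tRP = 16 cycles at 3200 MT/s.
for label, open_row in (("hit", 7), ("closed", None), ("conflict", 3)):
    cycles = access_cycles(open_row, 7, cl=16, trcd=16, trp=16)
    print(label, cycles, "cycles =", cycles_to_ns(cycles, 3200), "ns")
```

The conversion also shows why a higher data rate does not automatically mean lower latency: 16 cycles at 3200 MT/s and 30 cycles at 6000 MT/s both work out to 10 ns.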

Memory controllers, now integrated directly into CPUs, play a critical role. They map physical addresses onto channels, ranks, banks, rows, and columns, schedule commands, and handle error detection. Advanced controllers can reorder memory requests, predict access patterns, and interleave operations across channels to maximize throughput.
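One well-known reordering policy is first-ready, first-come-first-served (FR-FCFS): serve the oldest request that hits an already open row, and fall back to the oldest request overall. The sketch below illustrates that idea only. It is not a description of any particular controller, and the `Request` type and `pick_next` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Request:
    arrival: int  # arrival order; lower means older
    bank: int
    row: int


def pick_next(queue: List[Request], open_rows: Dict[int, int]) -> Request:
    """Choose the next request to issue under an FR-FCFS style policy.

    queue must be non-empty; open_rows maps each bank to its open row.
    """
    row_hits = [r for r in queue if open_rows.get(r.bank) == r.row]
    candidates = row_hits or queue  # prefer row hits, else consider everything
    return min(candidates, key=lambda r: r.arrival)


queue = [Request(0, bank=0, row=7), Request(1, bank=0, row=3), Request(2, bank=1, row=9)]
print(pick_next(queue, open_rows={0: 3}))  # the younger request wins: it hits the open row
```

A policy this simple can starve old requests that keep missing the open row, which is one reason practical schedulers add fairness limits on top.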

RAM Within the Memory Hierarchy

RAM does not operate in isolation. It sits between processor caches and long-term storage within a layered memory hierarchy. CPU caches offer nanosecond-level access but are limited in size. RAM provides larger capacity at higher latency, while solid-state drives offer persistence with far slower access times.

This hierarchy exists because no single memory technology can simultaneously deliver ultra-low latency, massive capacity, non-volatility, and low cost. RAM’s architectural role is to serve as a high-speed workspace that feeds data to processors faster than storage devices can.
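The payoff of layering can be estimated with the standard average memory access time (AMAT) formula, where each level contributes its hit time plus its miss rate times the cost of going one level further out. The latencies and miss rates below are made-up illustrative numbers, not measurements.

```python
from typing import List, Tuple


def amat(levels: List[Tuple[float, float]], memory_latency_ns: float) -> float:
    """Average memory access time in nanoseconds.

    levels lists (hit_time_ns, miss_rate) for each cache, nearest first.
    memory_latency_ns is the cost of an access that misses every cache.
    """
    penalty = memory_latency_ns
    # Work inward from RAM: each level adds its hit time and pays the
    # outer penalty only on the fraction of accesses that miss.
    for hit_time_ns, miss_rate in reversed(levels):
        penalty = hit_time_ns + miss_rate * penalty
    return penalty


# Hypothetical three-level cache hierarchy in front of 80 ns RAM.
caches = [(1.0, 0.05), (4.0, 0.20), (12.0, 0.50)]
print(f"with caches:    {amat(caches, 80.0):.2f} ns")
print(f"without caches: {amat([], 80.0):.2f} ns")
```

Even with these rough numbers, the average access lands far closer to cache speed than to RAM speed, which is the economic argument for the hierarchy.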

As processors gain more cores, the pressure on RAM bandwidth increases. Multi-core and many-core systems generate parallel memory requests that stress traditional memory architectures, leading to contention and diminishing returns without architectural innovation.

Current Performance Bottlenecks

Despite advances in memory speeds, RAM latency has improved far more slowly than CPU performance. This growing gap is often referred to as the memory wall. Processor throughput has grown by orders of magnitude over the past few decades, while DRAM access latency has improved only modestly, so processors spend a growing share of their time waiting on memory.

Another bottleneck lies in memory bandwidth saturation. High-performance workloads such as data analytics, machine learning, and real-time simulation consume massive amounts of data. Even with multi-channel memory configurations, bandwidth can become a limiting factor.
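Theoretical peak bandwidth follows directly from the data rate, the bus width, and the channel count. The helper below is a back-of-the-envelope sketch; the function name is hypothetical, and sustained bandwidth in practice is lower because of refresh, bank conflicts, and read/write turnarounds.

```python
def peak_bandwidth_gbs(data_rate_mts: float, bus_width_bits: int, channels: int = 1) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


# Example: 3200 MT/s on 64-bit channels.
print(peak_bandwidth_gbs(3200, 64, channels=1))
print(peak_bandwidth_gbs(3200, 64, channels=2))
```

With these inputs a single channel peaks at 25.6 GB/s and two channels at 51.2 GB/s, a ceiling that a bandwidth-hungry workload spread across many cores can reach quickly.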

Additionally, refresh overhead increases as memory density grows. Denser DRAM chips have more rows to refresh within the same retention window, so each refresh command keeps the device busy for longer, consuming power and reducing available bandwidth. These factors collectively constrain system scalability.
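A rough way to quantify this cost is to divide the time one refresh command occupies the device (tRFC) by the average interval between refresh commands (tREFI). The timing values below are illustrative assumptions in the range commonly quoted for DDR4-class parts; check a device datasheet before relying on them.

```python
def refresh_overhead(trfc_ns: float, trefi_ns: float) -> float:
    """Fraction of time a rank is busy refreshing instead of serving requests."""
    if trfc_ns < 0 or trefi_ns <= 0:
        raise ValueError("tRFC must be non-negative and tREFI must be positive")
    return trfc_ns / trefi_ns


TREFI_NS = 7800.0  # assumed average refresh interval
ASSUMED_TRFC_NS = {"8 Gb die": 350.0, "16 Gb die": 550.0}  # assumed refresh cycle times

for die, trfc in ASSUMED_TRFC_NS.items():
    print(f"{die}: {refresh_overhead(trfc, TREFI_NS):.1%} of time spent refreshing")
```

Under these assumptions the overhead grows from roughly 4.5% to roughly 7% as die density doubles, even though the refresh interval stays the same.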

The Evolution of DDR Standards

Double Data Rate memory standards have evolved to address bandwidth and efficiency challenges. Each generation increases data transfer rates while reducing operating voltage. DDR4 introduced bank groups to improve parallelism, while DDR5 expanded this concept further by doubling the number of banks and introducing on-die error correction.

DDR5 also shifts more power management responsibility onto the memory module itself. This change improves scalability but increases design complexity. Higher frequencies introduce signal integrity challenges, requiring more sophisticated motherboard layouts and memory training algorithms.

Looking ahead, future DDR standards will likely continue this trend, emphasizing parallelism and energy efficiency rather than dramatic latency reductions.

Specialized RAM for GPUs, AI, and Servers

Not all RAM is designed for general-purpose computing. Graphics processing units rely on high-bandwidth memory systems optimized for parallel throughput rather than low latency. These systems use wide interfaces and stacked memory dies to achieve exceptional data rates.

In data centers, registered and load-reduced memory modules improve signal stability and support larger capacities. Error-correcting code (ECC) memory is standard in these environments, as an uncorrected memory fault can silently corrupt data or crash a system.
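The principle behind error correction can be shown with a toy Hamming(7,4) code, which protects 4 data bits with 3 check bits and corrects any single flipped bit. This is a teaching sketch only: server memory typically uses much wider codes (for example, 8 check bits protecting 64 data bits), and the function names here are hypothetical.

```python
from typing import List, Tuple


def hamming_encode(nibble: int) -> List[int]:
    """Encode 4 data bits as a 7-bit codeword (bit positions 1..7)."""
    code = [0] * 8  # index 0 unused so indices match bit positions
    code[3], code[5], code[6], code[7] = [(nibble >> i) & 1 for i in range(4)]
    code[1] = code[3] ^ code[5] ^ code[7]  # parity over positions 1, 3, 5, 7
    code[2] = code[3] ^ code[6] ^ code[7]  # parity over positions 2, 3, 6, 7
    code[4] = code[5] ^ code[6] ^ code[7]  # parity over positions 4, 5, 6, 7
    return code[1:]


def hamming_decode(bits: List[int]) -> Tuple[int, int]:
    """Return (data, error_position); error_position is 0 if no error was seen."""
    code = [0] + list(bits)
    syndrome = 0
    for position in range(1, 8):
        if code[position]:
            syndrome ^= position  # XOR of set-bit positions is 0 for a valid codeword
    if syndrome:
        code[syndrome] ^= 1       # the syndrome names the flipped position
    data_bits = (code[3], code[5], code[6], code[7])
    return sum(bit << i for i, bit in enumerate(data_bits)), syndrome


word = hamming_encode(0b1011)
word[4] ^= 1  # simulate a single-bit fault at position 5
print(hamming_decode(word))
```

The decoder recovers the original value and reports which position was repaired, which is the same information a memory controller can log when it corrects an error.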

Artificial intelligence workloads increasingly demand memory architectures that can feed accelerators without bottlenecks. This has driven innovation in memory packaging and interconnects, blurring the line between memory and compute.

Energy Efficiency and Thermal Constraints

Power consumption has become a primary design constraint in modern RAM architecture. Data centers spend a significant portion of their energy budget on memory systems. Mobile devices face even stricter limits, as battery life directly impacts user experience.

Reducing voltage helps, but it also narrows noise margins and increases error susceptibility. Designers must carefully balance power savings against reliability. Advanced power-down modes, adaptive refresh rates, and fine-grained voltage control are now standard features.
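The appeal of lower voltage comes from the first-order model of switching power, which scales with capacitance, the square of the voltage, and frequency. The sketch below applies that proportionality only; it ignores leakage, I/O termination, and refresh power, so treat the result as a rough indication.

```python
def relative_dynamic_power(v_new: float, v_old: float,
                           f_new: float = 1.0, f_old: float = 1.0) -> float:
    """Ratio of new to old switching power, assuming P is proportional to C * V**2 * f
    and the switched capacitance C is unchanged."""
    return (v_new / v_old) ** 2 * (f_new / f_old)


# Nominal supply voltages: 1.2 V for DDR4 and 1.1 V for DDR5.
ratio = relative_dynamic_power(1.1, 1.2)
print(f"switching power at equal frequency: {ratio:.0%} of the original")
```

An 8% voltage reduction yields roughly a 16% reduction in switching power at the same frequency, which shows why designers accept the tighter noise margins.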

Thermal considerations also shape physical layouts. Dense memory configurations generate heat that must be dissipated without increasing system complexity or cost.

Emerging RAM Technologies

Several emerging memory technologies aim to overcome the limitations of conventional DRAM. These include non-volatile memories that promise near-DRAM speeds with persistent storage capabilities.

Some approaches focus on replacing the capacitor-based DRAM cell entirely, while others augment existing architectures with hybrid memory layers. These technologies could reduce refresh overhead, improve density, and enable new system architectures.

However, challenges remain in manufacturing scalability, endurance, and cost. Adoption will depend on whether these technologies can integrate seamlessly into existing memory ecosystems.

Future Memory Architectures

The future of RAM architecture is likely to involve tighter integration with processors. Concepts such as near-memory and in-memory computing aim to reduce data movement, which is one of the largest contributors to energy consumption.

Chiplet-based designs allow memory and compute components to be combined in flexible configurations. This modular approach improves yield, scalability, and customization. Vertical stacking further shortens interconnect distances, reducing latency and power usage.

Software will also play a larger role. Memory-aware programming models and intelligent runtime systems can optimize data placement and access patterns, extracting more performance from existing hardware.
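As a small illustration of what memory-aware data placement means, the sketch below counts how many cache lines a program touches when it reads one 4-byte field from every record, first with records stored contiguously (array of structs) and then with that field stored in its own array (struct of arrays). The 64-byte line size and the record layout are assumptions chosen for the example.

```python
from typing import Iterable, List

CACHE_LINE_BYTES = 64  # assumed line size


def lines_touched(addresses: Iterable[int], access_size: int) -> int:
    """Count distinct cache lines covered by accesses of access_size bytes."""
    lines = set()
    for address in addresses:
        first = address // CACHE_LINE_BYTES
        last = (address + access_size - 1) // CACHE_LINE_BYTES
        lines.update(range(first, last + 1))
    return len(lines)


def aos_addresses(count: int, record_size: int, field_offset: int) -> List[int]:
    """Byte address of one field in each record of an array of structs."""
    return [i * record_size + field_offset for i in range(count)]


def soa_addresses(count: int, field_size: int) -> List[int]:
    """Byte address of each element when the field has its own packed array."""
    return [i * field_size for i in range(count)]


N = 1024
print("array of structs:", lines_touched(aos_addresses(N, 64, 0), 4), "lines")
print("struct of arrays:", lines_touched(soa_addresses(N, 4), 4), "lines")
```

With 64-byte records, the first layout pulls in a full line per record while the second packs sixteen fields per line, so the same computation moves sixteen times less data from RAM.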

Industry and Application Impact

Advances in RAM architecture will influence nearly every computing domain. Consumer devices will benefit from faster multitasking and improved battery life. Enterprises will gain higher data throughput and reduced operational costs.

Emerging applications such as autonomous systems, real-time analytics, and immersive virtual environments depend on low-latency, high-bandwidth memory. Memory architecture will increasingly define system capabilities rather than simply supporting them.

Organizations that understand and adapt to these trends will be better positioned to leverage next-generation computing platforms.

Top 5 Frequently Asked Questions

Final Thoughts

RAM architecture is no longer a background consideration in system design. It is a defining factor in performance, efficiency, and scalability. Today’s memory systems reflect decades of incremental optimization, but they are approaching fundamental physical limits. The next era will be shaped by architectural innovation, tighter integration, and smarter software-hardware collaboration. Understanding where RAM has been and where it is going is essential for anyone building or relying on modern computing systems.

Resources

- JEDEC Solid State Technology Association – Memory Standards Documentation

- Computer Architecture: A Quantitative Approach by Hennessy and Patterson

- IEEE Micro and ACM Computing Surveys on Memory Systems

- Industry whitepapers from leading semiconductor manufacturers

I write for and assist as the editor-in-chief for 601MEDIA Solutions. I have been a digital entrepreneur since 1992. Articles may include AI-assisted research. Always Keep Learning! Notice: All content is published for educational and entertainment purposes only. NOT LIFE, HEALTH, SURVIVAL, FINANCIAL, BUSINESS, LEGAL OR ANY OTHER ADVICE. Learn more about Mark Mayo