What Does MCP (Model Context Protocol) Do?

The Model Context Protocol (MCP) is an emerging open standard that allows large language models (LLMs) to securely and consistently access external tools, data sources, and services. It solves a core limitation of modern AI systems: the inability to reliably interact with real-world systems without brittle, one-off integrations.

Table of Contents

- What Is the Model Context Protocol?

- Why MCP Was Created

- How MCP Works at a Technical Level

- MCP vs Plugins and Traditional APIs

- Business and Enterprise Value of MCP

- Real-World Use Cases

- The Future of MCP

- Top 5 Frequently Asked Questions

- Final Thoughts

- Resources

What Is the Model Context Protocol?

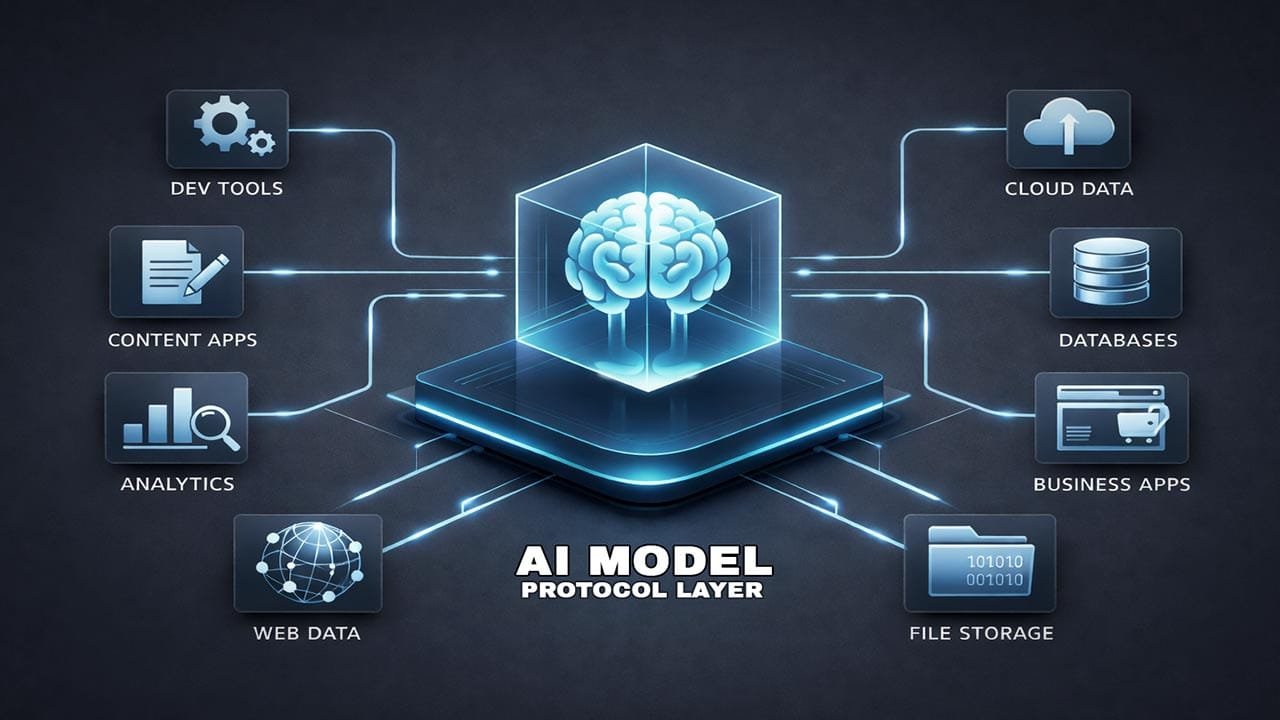

The Model Context Protocol (MCP) is an open protocol that standardizes how AI models connect to external systems such as databases, internal tools, SaaS platforms, file systems, and APIs. Instead of hard-coding integrations into each AI application, MCP creates a shared communication layer between models and tools.

In simple terms, MCP acts as a universal adapter. It tells an AI model what tools exist, what they can do, and how to use them safely, all in a structured, machine-readable way.
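To make "structured, machine-readable" concrete, here is a minimal sketch of what a tool description might look like. The field names (`name`, `description`, `inputSchema`) follow the shape MCP uses for tools, with the input schema written as JSON Schema. The `create_ticket` tool and the `describe_for_model` helper are invented for illustration.

```python
import json

# Hypothetical tool descriptor. The field names mirror the shape MCP uses
# for tools; the tool itself and its parameters are made up for this example.
create_ticket_tool = {
    "name": "create_ticket",
    "description": "Open a support ticket in the help desk system.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "priority": {"type": "string", "enum": ["low", "normal", "high"]},
        },
        "required": ["title"],
    },
}


def describe_for_model(tool: dict) -> str:
    """Render a descriptor as JSON text an application could hand to a model."""
    return json.dumps(tool, indent=2, sort_keys=True)


print(describe_for_model(create_ticket_tool))
```

Because the description is plain data, the same descriptor can be shown to any model or validated by any client without tool-specific code.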

MCP was introduced by Anthropic to address a growing ecosystem problem: every AI vendor and developer was building custom connectors, creating fragmentation, security risks, and high maintenance costs.

Why MCP Was Created

Modern LLMs are powerful reasoning engines, but they are isolated by default. They do not inherently know how to:

- Query live databases

- Access private company data

- Trigger workflows or automate tasks

- Respect enterprise security boundaries

Before MCP, developers relied on plugins, tool-specific prompts, or custom middleware. These approaches created three systemic problems.

First, integrations were brittle. Small API changes could silently break AI behavior.

Second, security was inconsistent. Many solutions relied on prompt-based permissions rather than enforceable access controls.

Third, scaling was inefficient. Every new model or tool required a new integration.

MCP was designed to solve all three by introducing a clean separation between AI reasoning and system access.

How MCP Works at a Technical Level

MCP follows a client–server architecture.

The MCP server exposes tools, resources, and capabilities. These may include:

- Functions such as “create ticket” or “run query”

- Structured data sources like documents or logs

- Stateful tools such as terminals or file systems

The MCP client, usually embedded in an AI application, requests context from the server. The server responds with a structured description of available tools, input schemas, and constraints.
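The discovery step can be sketched as a JSON-RPC 2.0 exchange, which is the message format MCP uses. This is a toy, in-memory illustration rather than a real server: the `run_query` tool, `handle_request`, and `list_tools` are hypothetical names, and the initialization handshake that a real session performs first is left out.

```python
import json

# Hypothetical tool catalog held by the server.
TOOLS = [
    {
        "name": "run_query",
        "description": "Run a read-only SQL query.",
        "inputSchema": {
            "type": "object",
            "properties": {"sql": {"type": "string"}},
            "required": ["sql"],
        },
    }
]


def handle_request(raw: str) -> str:
    """Toy server: answers 'tools/list' and rejects everything else."""
    request = json.loads(raw)
    if request.get("method") == "tools/list":
        response = {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": TOOLS}}
    else:
        response = {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }
    return json.dumps(response)


def list_tools(send) -> list:
    """Toy client: asks the server which tools it offers."""
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
    reply = json.loads(send(json.dumps(request)))
    return reply["result"]["tools"]


print([tool["name"] for tool in list_tools(handle_request)])
```

Here `send` is just a function call. In practice the same messages would travel over a transport such as standard input/output or HTTP.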

The model does not directly call APIs. Instead, it reasons over the provided context and issues structured tool calls. The client forwards each call to the MCP server, which executes it and returns the result.
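A rough sketch of that call path follows. The model emits only a name and arguments, the client wraps them in a `tools/call` request, and the server checks the arguments before running anything. The registry, the `create_ticket` handler, and the helper names are assumptions for illustration. For brevity, every failure is reported through the `isError` flag.

```python
import json


def create_ticket(title: str, priority: str = "normal") -> str:
    """Hypothetical tool implementation that lives on the server side."""
    return f"Ticket created: {title} (priority={priority})"


# Hypothetical registry: tool name -> handler plus its required arguments.
REGISTRY = {"create_ticket": {"handler": create_ticket, "required": ["title"]}}


def tool_result(text: str, is_error: bool) -> dict:
    """Build a result with one text content item and an error flag."""
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def server_handle_call(raw: str) -> str:
    """Toy server: validate the call, run the handler, return the result."""
    request = json.loads(raw)
    name = request["params"]["name"]
    arguments = request["params"].get("arguments", {})
    entry = REGISTRY.get(name)
    if entry is None:
        result = tool_result(f"Unknown tool: {name}", True)
    else:
        missing = [key for key in entry["required"] if key not in arguments]
        if missing:
            result = tool_result(f"Missing arguments: {missing}", True)
        else:
            result = tool_result(entry["handler"](**arguments), False)
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


def client_execute(model_tool_call: dict, send) -> dict:
    """Toy client: wrap the model's structured call and forward it."""
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {
            "name": model_tool_call["name"],
            "arguments": model_tool_call.get("arguments", {}),
        },
    }
    return json.loads(send(json.dumps(request)))["result"]


# What a model might emit after reading the tool description.
model_output = {"name": "create_ticket", "arguments": {"title": "Printer offline"}}
print(client_execute(model_output, server_handle_call)["content"][0]["text"])
```

The model never touches the handler or any credentials. It only produces data that the client and server decide whether to act on.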

This design creates three critical advantages:

- Models remain stateless and portable

- Security policies live outside the model

- Tools can be reused across models

MCP vs Plugins and Traditional APIs

AI plugins and MCP may look similar on the surface, but they solve different problems.

Plugins are typically model-specific. They rely on prompts and UI-level agreements that vary across platforms.

MCP is model-agnostic. Any model running behind a compliant client can consume MCP context without retraining or prompt hacking.

Traditional APIs require developers to write glue code and embed logic into applications. MCP abstracts that layer, allowing AI systems to dynamically discover and use tools at runtime.
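The difference from hand-written glue code can be sketched as follows. The application below knows nothing about specific tools in advance. It builds a routing table from whatever each server advertises. `FakeServer`, `build_tool_index`, and `dispatch` are hypothetical stand-ins, not part of any MCP SDK.

```python
class FakeServer:
    """Hypothetical in-memory stand-in for a connection to one MCP server."""

    def __init__(self, label: str, tool_names: list[str]):
        self.label = label
        self._tool_names = tool_names

    def list_tools(self) -> list[dict]:
        # Advertise tools the way a discovery call would.
        return [
            {"name": name, "description": f"{name} on {self.label}"}
            for name in self._tool_names
        ]

    def call_tool(self, name: str, arguments: dict) -> str:
        return f"{self.label} handled {name}"


def build_tool_index(servers: list[FakeServer]) -> dict[str, FakeServer]:
    """Map every advertised tool name to the server that offers it."""
    index = {}
    for server in servers:
        for tool in server.list_tools():
            index[tool["name"]] = server
    return index


def dispatch(index: dict[str, FakeServer], name: str, arguments: dict) -> str:
    """Route a tool call to whichever server advertised that tool."""
    return index[name].call_tool(name, arguments)


servers = [
    FakeServer("crm", ["lookup_customer"]),
    FakeServer("billing", ["get_invoice"]),
]
index = build_tool_index(servers)
print(sorted(index))
print(dispatch(index, "get_invoice", {"invoice_id": "INV-1"}))
```

Adding a third server makes its tools available after the next call to `build_tool_index`, with no change to the routing code.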

From an architecture perspective, MCP shifts AI integration from application code to infrastructure design.

Business and Enterprise Value of MCP

For enterprises, MCP directly reduces operational risk.

Security teams gain centralized control over what AI systems can access. Permissions are enforced in code by the MCP server and the host application, not inferred from prompts.
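One way to picture enforcement that lives outside the model is an allowlist checked before any tool runs. MCP does not prescribe a policy format, so the roles, the `POLICY` table, and the function names below are purely illustrative assumptions.

```python
class PermissionDenied(Exception):
    """Raised when a caller's role is not allowed to use a tool."""


# Hypothetical policy: role -> set of tool names that role may call.
POLICY = {
    "support_agent": {"read_ticket", "create_ticket"},
    "analyst": {"run_query"},
}


def authorize(role: str, tool_name: str) -> None:
    """Reject the call unless the policy explicitly allows it."""
    if tool_name not in POLICY.get(role, set()):
        raise PermissionDenied(f"{role} may not call {tool_name}")


def guarded_call(role: str, tool_name: str, arguments: dict, handlers: dict) -> str:
    """Check the policy first, then run the tool handler."""
    authorize(role, tool_name)
    return handlers[tool_name](**arguments)


# Hypothetical handlers standing in for real tool implementations.
handlers = {
    "create_ticket": lambda title: f"created: {title}",
    "run_query": lambda sql: f"ran: {sql}",
}

print(guarded_call("support_agent", "create_ticket", {"title": "VPN down"}, handlers))

try:
    guarded_call("support_agent", "run_query", {"sql": "SELECT 1"}, handlers)
except PermissionDenied as exc:
    print(f"denied: {exc}")
```

No matter what the prompt says, a call outside the allowlist fails before the handler is reached, which is what makes the decision auditable.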

Engineering teams reduce duplication. A single MCP server can support multiple AI assistants, agents, and workflows.

Executives gain faster time to value. New tools become instantly usable by AI systems without reengineering.

According to early enterprise pilots, standardizing AI integrations can reduce maintenance overhead by over 30 percent while improving compliance auditability.

Real-World Use Cases

MCP enables high-impact use cases that were previously fragile or unsafe.

In software development, AI assistants can securely read repositories, run tests, and file issues without direct API exposure.

In customer support, models can access CRM systems, billing platforms, and knowledge bases through a unified interface.

In operations, AI agents can monitor logs, query metrics, and trigger remediation workflows in real time.

The key shift is reliability. AI behavior becomes predictable because tool access is structured, not improvised.

The Future of MCP

MCP is still early, but its trajectory mirrors other successful infrastructure standards.

As adoption grows, expect:

- A marketplace of reusable MCP servers

- Cross-vendor interoperability

- Stronger governance and auditing features

In the long term, MCP positions AI systems less like chatbots and more like digital operators embedded into enterprise workflows.

Top 5 Frequently Asked Questions

Final Thoughts

The most important takeaway is that MCP is not about making AI smarter. It is about making AI usable at scale.

By separating reasoning from access, MCP turns fragile AI demos into dependable systems. As AI shifts from experimentation to infrastructure, protocols like MCP will define who scales safely and who does not.

Resources

- Anthropic – Model Context Protocol documentation

- AI Infrastructure Architecture Whitepapers

- Enterprise AI Security Research Reports

I am a huge enthusiast for Computers, AI, SEO-SEM, VFX, and Digital Audio-Graphics-Video. I have been a digital entrepreneur since 1992. Articles include AI-assisted research. Always Keep Learning! Notice: All content is published for educational and entertainment purposes only. NOT LIFE, HEALTH, SURVIVAL, FINANCIAL, BUSINESS, LEGAL OR ANY OTHER ADVICE. Learn more about Mark Mayo