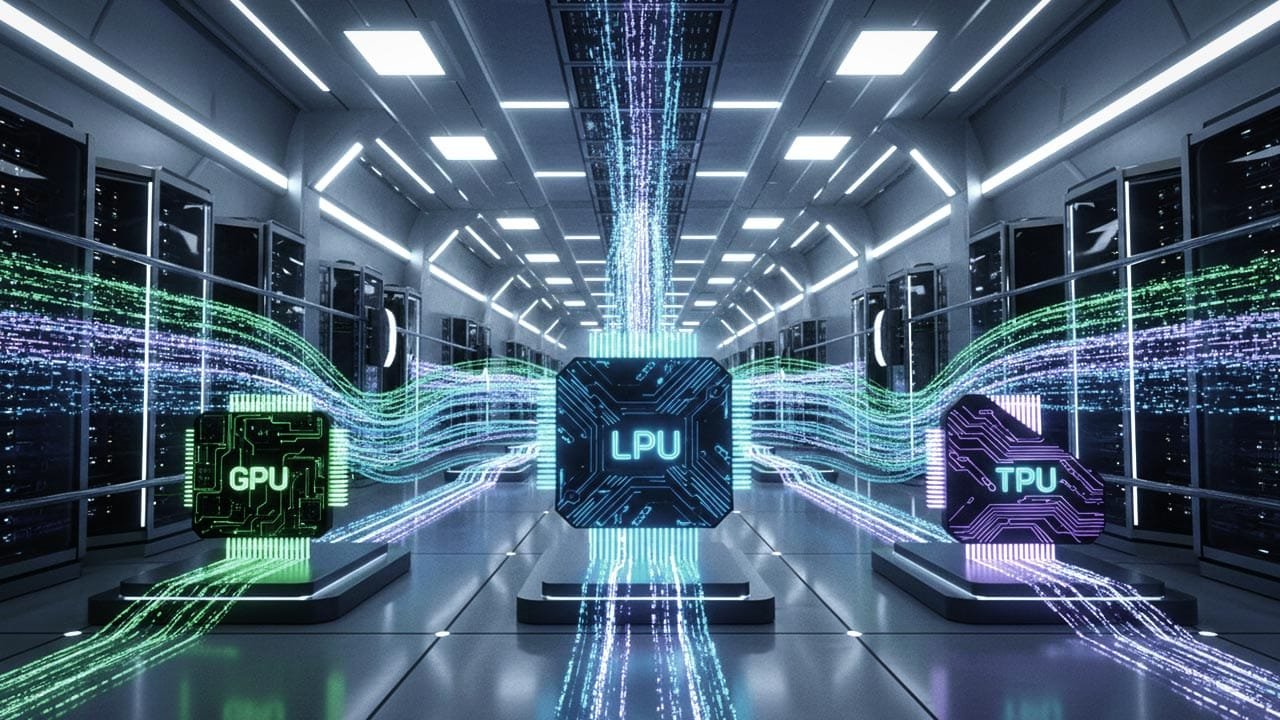

GPU vs TPU vs LPU: A Technical Guide to AI Hardware

AI hardware can feel intimidating at first, like walking into a city where every street sign is written in acronyms. GPUs, TPUs, LPUs, FLOPS, tensors, latency, throughput. It is a lot. Take a breath. You are not behind. You are exactly where you should be. By the end you will confidently know what GPUs, TPUs, and LPUs are, how they differ, and when each one actually makes sense.

Table of Contents

- Why AI Hardware Matters More Than Ever

- GPU Explained Like a Friendly Multitool

- TPU Explained Like a Custom-Built Factory

- LPU Explained Like a High-Speed Train

- Architectural Differences That Actually Matter

- Training vs Inference: Who Does What Best

- Latency vs Throughput Without the Headache

- Cost and Energy Efficiency Reality Check

- Real-World Use Cases and Examples

- Where AI Hardware Is Heading Next

- Top 5 Frequently Asked Questions

- Final Thoughts

- Resources

Why AI Hardware Matters More Than Ever

AI models today are enormous. They are not just big, they are “needs-a-small-power-plant” big. Training a modern large language model goes far beyond billions or even trillions of calculations. Estimates for a GPT-3-scale run land around 10^23 floating-point operations in total, ground out step after step until the model finally stops embarrassing itself.

Hardware determines how fast this happens, how much it costs, and whether your AI feels snappy or painfully slow. Choosing the wrong hardware is like trying to move apartments using a bicycle. Technically possible. Emotionally devastating.
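To make "how fast this happens" a little more concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the common rule of thumb that training costs about 6 floating-point operations per parameter per training token. The function names, chip count, per-chip speed, and utilization figure are all hypothetical placeholders, not measurements of any real system.

```python
SECONDS_PER_DAY = 86_400

def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate total training compute with the ~6 * N * D rule of thumb."""
    return 6.0 * num_params * num_tokens

def training_days(total_flops: float, num_chips: int,
                  flops_per_chip: float, utilization: float) -> float:
    """Days needed if every chip sustains `utilization` of its peak speed."""
    sustained_flops_per_second = num_chips * flops_per_chip * utilization
    return total_flops / sustained_flops_per_second / SECONDS_PER_DAY

# GPT-3-scale inputs: 175 billion parameters, 300 billion training tokens.
flops = training_flops(175e9, 300e9)

# Hypothetical cluster: 1,024 chips, 1e15 FLOP/s peak each, 40% utilization.
days = training_days(flops, num_chips=1024, flops_per_chip=1e15, utilization=0.4)
print(f"{flops:.2e} FLOPs, about {days:.1f} days")
```

The useful part is the shape of the formula, not the specific numbers: halve the utilization or the chip count and the wait doubles.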

This is why GPUs, TPUs, and LPUs exist. Each one is optimized for a different way of thinking, moving data, and crunching numbers.

GPU Explained Like a Friendly Multitool

A GPU, or Graphics Processing Unit, started life drawing video game explosions and movie dragons. Somewhere along the way, researchers realized GPUs are fantastic at doing the same math operation thousands of times in parallel. AI looked at that and said, “Yes, I will take all of that.”

Imagine a GPU as a giant kitchen with thousands of chefs. Each chef is not very fancy. They only know how to chop carrots. But if you need to chop ten thousand carrots, this kitchen becomes unstoppable.

GPUs excel at parallelism. Neural networks are basically giant math soups where the same operations happen again and again across massive matrices. GPUs eat this for breakfast.
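Here is a minimal Python sketch of why matrix math suits a kitchen full of chefs. Every cell of a matrix product is its own small dot product that depends on no other cell, so each one can be handed to a separate worker. The names `output_cell` and `parallel_matmul` are made up for illustration, and Python threads will not actually make this faster; the point is only that the work splits cleanly.

```python
from concurrent.futures import ThreadPoolExecutor

def output_cell(a, b, i, j):
    """One 'chef': the dot product for a single output cell."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def parallel_matmul(a, b, workers=4):
    """Multiply matrices by handing every output cell to a worker pool."""
    rows, cols = len(a), len(b[0])
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # No cell needs the result of another cell, so order of execution
        # does not matter; pool.map still returns results in input order.
        values = list(pool.map(lambda ij: output_cell(a, b, *ij), cells))
    return [values[r * cols:(r + 1) * cols] for r in range(rows)]

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(parallel_matmul(a, b))  # [[19, 22], [43, 50]]
```

A real GPU does the same kind of split, just with thousands of hardware threads instead of four Python ones.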

Companies like NVIDIA built entire software ecosystems around this idea. CUDA, cuDNN, and TensorRT turned GPUs into a full-stack AI platform. The result is that GPUs became the default brain of modern AI.

Strengths of GPUs include flexibility, massive developer support, and strong performance for both training and inference. Weaknesses include high power consumption and, on narrow, fixed workloads, lower efficiency than chips built for that one job.

TPU Explained Like a Custom-Built Factory

TPUs, or Tensor Processing Units, are what happens when you look at GPUs and say, “Nice, but what if we built something that only does AI math and nothing else.”

Developed by Google, TPUs are application-specific integrated circuits designed specifically for tensor operations. They are not generalists. They are specialists. They do not chop carrots. They assemble cars on a perfectly tuned assembly line.

TPUs shine in environments where workloads are predictable and standardized, especially inside Google’s own infrastructure. They are incredibly efficient for large-scale training and inference when models fit their expected patterns.

The tradeoff is flexibility. You do not tinker with a TPU the way you do with a GPU. TPUs like rules. They like structure. They like knowing what tomorrow looks like.

LPU Explained Like a High-Speed Train

LPUs, or Language Processing Units, are the new kids on the block, popularized by companies like Groq. LPUs are built with one thing in mind: inference speed and predictability.

If GPUs are kitchens and TPUs are factories, LPUs are bullet trains. They do not stop often. They do not change tracks. They go very fast in one direction.

LPUs focus on deterministic execution: the order and timing of operations are planned ahead of time rather than decided on the fly. This means they deliver consistent, ultra-low latency responses. When a user asks a question, the model answers immediately, not “eventually, depending on what else is happening.”

This makes LPUs incredibly attractive for real-time AI applications like chatbots, voice assistants, and interactive systems where delays feel personal.
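A toy Python sketch of what "deterministic" buys you. It compares a program whose per-operation costs are fixed in advance with the same program when each operation may stall for a random amount of time. The cycle counts in `OP_CYCLES`, the `max_stall` value, and both function names are hypothetical, chosen only to show the idea, and do not describe real LPU or GPU timings.

```python
import random

# Hypothetical per-operation costs in cycles; not real hardware numbers.
OP_CYCLES = {"matmul": 40, "add": 2, "softmax": 12}

def static_latency(program):
    """Latency known before running: just add up the fixed costs."""
    return sum(OP_CYCLES[op] for op in program)

def dynamic_latency(program, rng, max_stall=15):
    """Same program, but each op may wait a random 0..max_stall cycles."""
    return sum(OP_CYCLES[op] + rng.randint(0, max_stall) for op in program)

program = ["matmul", "add", "softmax", "matmul", "add"]
rng = random.Random(0)  # seeded so the sketch is repeatable

static_runs = [static_latency(program) for _ in range(5)]
dynamic_runs = [dynamic_latency(program, rng) for _ in range(5)]

print("static :", static_runs)   # the same number every time
print("dynamic:", dynamic_runs)  # never lower than static, usually higher
```

The bullet train is the first list: you can print the timetable before the train leaves.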

Architectural Differences That Actually Matter

Under the hood, GPUs rely on massive parallel threads, TPUs rely on systolic arrays optimized for tensor math, and LPUs rely on carefully orchestrated instruction pipelines.

Here is the friendly takeaway. GPUs are flexible multitaskers. TPUs are disciplined specialists. LPUs are speed demons with a single obsession.

Each architecture optimizes memory movement differently. GPUs juggle memory constantly. TPUs minimize movement through tightly coupled design. LPUs reduce unpredictability by controlling execution paths.
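For the curious, here is a small Python simulation of the systolic array idea. It models a grid of simple cells where the cell at row `i`, column `j` receives one pair of inputs per cycle and adds their product to a running total. This is one textbook variant (often called output-stationary), picked here for simplicity; it is an assumption for illustration and not a description of how any particular TPU is wired. The function name `systolic_matmul` is made up.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array, cycle by cycle.

    Inputs are fed in skewed: cell (i, j) sees a[i][k] and b[k][j]
    at cycle t = i + j + k, as data ripples across the grid.
    """
    n, depth, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]    # one accumulator per cell
    total_cycles = n + m + depth - 2     # last pair arrives at cycle n+m+depth-3
    for t in range(total_cycles):
        for i in range(n):
            for j in range(m):
                k = t - i - j            # which input pair reaches this cell now
                if 0 <= k < depth:
                    acc[i][j] += a[i][k] * b[k][j]
    return acc, total_cycles

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
result, cycles = systolic_matmul(a, b)
print(result, "in", cycles, "cycles")  # [[19, 22], [43, 50]] in 4 cycles
```

Notice that no cell ever goes looking for data. Values arrive from the neighbors on schedule, which is the "minimize movement" idea in miniature.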

Training vs Inference: Who Does What Best

Training is the gym. Inference is game day.

GPUs dominate training because flexibility matters when models are changing constantly. TPUs are exceptional for large-scale, repeated training jobs where efficiency compounds. LPUs are not designed for training at all. They show up fresh on game day and perform.

For inference, LPUs shine in latency-sensitive environments. GPUs handle mixed workloads well. TPUs deliver massive throughput when batching requests.
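To see why the gym is harder than game day, here is a tiny Python sketch with a one-weight, one-bias model. Inference is a single forward pass. A training step runs that same forward pass, then computes gradients and updates the parameters. The model, the data, and the learning rate are all invented for illustration.

```python
def predict(w, b, x):
    """Inference: one forward pass, nothing is changed."""
    return w * x + b

def train_step(w, b, x, y, lr=0.1):
    """Training: forward pass, then gradients, then a parameter update."""
    error = predict(w, b, x) - y   # forward pass plus comparison to the target
    grad_w = 2 * error * x         # gradient of squared error w.r.t. w
    grad_b = 2 * error             # gradient of squared error w.r.t. b
    return w - lr * grad_w, b - lr * grad_b

# Made-up data that follows y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]

w, b = 0.0, 0.0
for _ in range(200):               # the gym: many repeated passes
    for x, y in data:
        w, b = train_step(w, b, x, y)

print(predict(w, b, 3.0))          # game day: one cheap call, close to 7
```

Training repeats the expensive step thousands of times and needs to store and rewrite the parameters. Inference only reads them, which is why inference-only chips can make different design choices.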

Latency vs Throughput Without the Headache

Latency is how long one request takes. Throughput is how many requests you can complete in a given amount of time. LPUs are latency kings. GPUs are throughput all-rounders. TPUs are throughput monsters. If inference were pizza delivery, LPUs bring one pizza, hot, in ten minutes. GPUs bring five pizzas in thirty minutes. TPUs bring a truckload if you ordered ahead.
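The pizza numbers above work as a quick Python calculation. The sketch assumes the ten-minute delivery carries a single pizza, and the function name `throughput_per_hour` is made up for the example.

```python
def throughput_per_hour(items_per_trip, minutes_per_trip):
    """Items completed per hour if trips run back to back."""
    return items_per_trip * 60 / minutes_per_trip

# One pizza every ten minutes: low latency, modest throughput.
single_latency_min = 10
single = throughput_per_hour(1, single_latency_min)

# Five pizzas every thirty minutes: higher latency, higher throughput.
batched_latency_min = 30
batched = throughput_per_hour(5, batched_latency_min)

print(f"single : wait {single_latency_min} min, {single} pizzas/hour")   # 6.0
print(f"batched: wait {batched_latency_min} min, {batched} pizzas/hour")  # 10.0
```

The batched driver delivers more pizzas per hour, but every customer waits three times as long. That is the whole latency versus throughput tradeoff in two lines of arithmetic.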

Cost and Energy Efficiency Reality Check

GPUs are expensive and power-hungry, but widely available. TPUs are cost-effective at scale but mostly locked into Google’s ecosystem. LPUs promise excellent performance per watt but are still maturing in availability. Energy efficiency is becoming a deciding factor. AI power bills are no joke. Hardware choices now affect sustainability as much as performance.
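If you want to sanity-check a power bill yourself, the arithmetic is short. This Python sketch converts a chip's power draw and request rate into energy per request and electricity cost per million requests. The function names are made up, and the 700 watts, 100 requests per second, and 0.10 per kilowatt-hour used below are hypothetical inputs, not figures for any real accelerator.

```python
JOULES_PER_KWH = 3_600_000

def joules_per_request(power_watts, requests_per_second):
    """Energy used per request: watts are joules per second."""
    return power_watts / requests_per_second

def energy_cost_per_million(power_watts, requests_per_second, price_per_kwh):
    """Electricity cost of serving one million requests."""
    total_joules = joules_per_request(power_watts, requests_per_second) * 1_000_000
    return total_joules / JOULES_PER_KWH * price_per_kwh

# Hypothetical accelerator: 700 W, 100 requests/second, 0.10 per kWh.
energy = joules_per_request(700, 100)
cost = energy_cost_per_million(700, 100, 0.10)
print(f"{energy} J per request, {cost:.3f} per million requests")
```

Plug in measured numbers for the chips you are comparing and "performance per watt" stops being a slogan and becomes a line item.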

Real-World Use Cases and Examples

GPUs dominate research labs, startups, and mixed workloads. TPUs power hyperscale AI services, most visibly inside Google. LPUs target real-time user-facing AI where speed feels magical. There is no universal winner. Only the right tool for the job.

Where AI Hardware Is Heading Next

The future is heterogeneous. AI systems will mix GPUs, TPUs, LPUs, and domain-specific accelerators. Software will decide dynamically where workloads go. Think less “which chip wins” and more “which orchestra sounds best together.”
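What might "software decides where workloads go" look like? Here is a deliberately simple Python sketch that routes a job using the rules of thumb from this guide. The `Workload` fields and the `pick_accelerator` function are hypothetical, and a real scheduler would also weigh cost, availability, and model compatibility.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    kind: str                        # "training" or "inference"
    latency_sensitive: bool = False  # does a human wait on each response?
    large_scale: bool = False        # big, predictable, batch-friendly job?

def pick_accelerator(w: Workload) -> str:
    """Route a workload using this guide's rules of thumb."""
    if w.kind == "training":
        # Large, repeated jobs favor TPUs; changing models favor GPUs.
        return "TPU" if w.large_scale else "GPU"
    if w.kind == "inference":
        if w.latency_sensitive:
            return "LPU"             # real-time, user-facing responses
        return "TPU" if w.large_scale else "GPU"
    raise ValueError(f"unknown workload kind: {w.kind!r}")

jobs = [
    Workload("training"),
    Workload("training", large_scale=True),
    Workload("inference", latency_sensitive=True),
    Workload("inference", large_scale=True),
]
print([pick_accelerator(job) for job in jobs])  # ['GPU', 'TPU', 'LPU', 'TPU']
```

Four if-statements are not an orchestra, but they show the shift in thinking: the question moves from "which chip" to "which chip for this job."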

Top 5 Frequently Asked Questions

Final Thoughts

Here is the most important takeaway. AI hardware is not about winning. It is about fit. GPUs are your reliable Swiss Army knife. TPUs are your industrial assembly line. LPUs are your high-speed express lane. When you understand the personality of each, the confusion melts away. And once that happens, AI stops feeling intimidating and starts feeling exciting again.

I am a huge enthusiast for Computers, AI, SEO-SEM, VFX, and Digital Audio-Graphics-Video. I have been a digital entrepreneur since 1992. Articles include AI-assisted research. Always Keep Learning! Notice: All content is published for educational and entertainment purposes only. NOT LIFE, HEALTH, SURVIVAL, FINANCIAL, BUSINESS, LEGAL OR ANY OTHER ADVICE. Learn more about Mark Mayo